EDITOR'S NOTE: This story originally appeared in the August issue of GCU Magazine.

Story by Mike Kilen, Mark Gonzales and Lana Sweeten-Shults

Dressed in purple Grand Canyon University scrubs, stethoscope dangling around her neck, Mira flashes a reassuring smile.

Reassuring is good at this point.

It’s 1 a.m., your biology exam is in seven hours, the Academic and Career Excellence Centers are closed, and your professor is tucked safely in bed.

“How can I help you today?” Mira asks.

You zip off your question: “How does metabolism work?”

“Great question!” Mira is 100% thrilled to fill in the gap. “Metabolism is like your body’s engine, responsible for converting food into energy. Let’s break it down, step by step.”

She rattles off, in neat, numerical order, with added bullet points: ingestion, digestion, absorption, cellular metabolism, catabolism, anabolism.

To make sure you’ve got it, she follows up with: “Which phase of metabolism involves breaking down food into simpler forms?”

Your volley: “Digestion.”

“That’s correct!” she says. “You’re doing awesome.”

You would give her a hug . . . if you could.

But GCU’s science and health care assistant isn’t human. It’s actually the university’s first artificial intelligence chatbot, rolled out in summer 2023 to help prenursing students with prerequisite science courses.

This fall, she’ll be introduced to ground students, tutoring them on everything from Biology 201 and 202 to Chemistry 101.

And she isn’t the only chatbot at GCU.

Plans are for math tutor Isaac, named after Sir Isaac Newton, to debut in the fall.

Dr. Mark Wooden, College of Natural Sciences dean, and Dr. Lisa Smith, College of Nursing and Health Care Professions dean, knew exactly what they wanted to do when GCU’s faculty was inspired to weave AI into the classroom.

“We were just rolling out our science courses online and wanted to assure that students had all the resources they could have available to be successful,” Wooden said.

An AI tutor seemed like the perfect first step, an academic assistant that could be available 24/7, even at 1 a.m., when the academic help centers aren’t open or your nicely tucked-in professor isn’t awake.

And who better to pave the way than one of the fastest-growing student populations: prenursing students in the midst of their prerequisites?

An academic leadership team worked with multiple departments and colleges to develop, test and make Mira happen.

Nursing faculty knew that students struggle with chemistry and anatomy I and II, and that students who don’t do well either don’t get into the nursing program or change majors.

“So if we’re going to help with the nursing shortage and fill our ABSN seats, we needed to be even more proactive to help students on the front end to be successful in their prereqs,” Smith said.

A tool like Mira, she added, “might be a game-changer.”

Science and nursing leaders have been pleased with the results. Student feedback has been positive enough that the number of courses for which Mira is trained to tutor is being increased from 10 to 25.

The math department is hoping for the same kind of success with Isaac.

Department leaders turned to a Grand Canyon Education (GCE) software-development whiz to build a tool to help introductory-level college algebra students.

“That course is a struggle point for students, so we were aiming for those areas,” said chatbot architect Nathan Harris, manager of Grand Canyon Education’s Academic Web Services, the department responsible for building GCU’s AI academic assistants. For now, though, the scope stays narrow: “We are not floating in the area of differential equations yet; we are trying not to overextend them.”

Stuck on an algebra equation? Isaac can help.

“Isaac will walk them through the problem to help them understand the steps,” he said. “It’s important to remember, this guides learning and doesn’t just supply the answer.”

That’s a vital element in the development of these AI teaching assistants: They don’t give students the answer; rather, they teach students the process to find the answer.

“You NEED a student to think and come up with the answer themselves,” Wooden said. If you just want an answer, one that may be questionable coming from a general-purpose AI tool that pulls everything from the internet, right or wrong, “that’s not what this is.”

Miranda Hildebrand, Academic Web Services executive director, said, “We just see such great opportunity there and are super optimistic that we can actually truly help students in a way that normal technology may not be able to.”

She added that these GCU-specific chatbots offer students something public AI tools don’t: security. Third-party chatbots may use your data to train their future AI models unless you tell them not to. GCU’s chatbots won’t.

Mira and Isaac aren’t the university’s only venture into leveraging AI to teach students.

GCU President Brian Mueller has said that, just as the university pioneered online education 15 years ago, it’s determined to be a leader in artificial intelligence in the next decade.

“There’s so much more we can do for students with generative AI,” Hildebrand said. “What we learn with these tutor chatbots will help lead us down a pathway of greater study tools and resources that will help students truly be able to elevate their learning.

“This is just the tip of the iceberg.”

Embracing AI

Comets hitting Earth’s surface are called impact events.

Little did anyone know that AI virtual assistant ChatGPT would be this generation’s comet, quietly arriving on Nov. 30, 2022.

The world was in awe; the academic world was in a panic.

There was a fear, when ChatGPT made its debut, that students would use it to write their essays or do their math homework, and that “it was going to be the biggest destructor to the integrity of our academics since the calculator, since Wikipedia, since Google searches,” said Honors College Dean Dr. Breanna Naegeli, who saw discussions about AI and academic integrity start to bubble up in the committee she leads, the Character and Integrity Committee.

The gut reaction was to ban it, throw up firewalls, create strict-repercussion policies.

But after that initial scramble, it became clear AI wasn’t going away. Some 200 new AI platforms emerge each month, Naegeli said, so GCU needed to embrace it.

More than that, Mueller intended for GCU to revolutionize teaching with AI and to become a leader in the field.

“Our talk became about taking a more proactive approach. How do we create a positive culture around integrating AI into higher education? How do we support faculty?” Naegeli said. “We need to shed light on the positive uses for AI and how students can use it in a positive and productive manner and as an effective learning tool — not just an answer bot.”

Naegeli’s committee created policies, discussed how to enforce them and talked about AI and the university’s code of conduct.

“We’re still talking about AI because it impacts academic integrity,” she said.

Ethical implications

And it also highlights character issues.

The Canyon Center for Character Education developed an asynchronous professional development tool called Character and AI in Higher Education, scheduled to be available in the fall.

“There are ethical implications when it comes to using artificial intelligence,” said Emily Farkas, the center’s program director. “We know it is an easy platform to plug in and easily get an answer. That’s OK, as long as you are citing AI or using that information to dig a little deeper in whatever context you are writing about.”

There are multiple ethical scenarios to consider, which is why Sean Sullivan has come up with a way for AI to assist in learning about them. As director of Curriculum Development and Design, he works with numerous university departments on integrating AI.

His professional development course for the character center not only covers the basics of how to use AI in character education but also includes activities such as ethical dilemma situations. For example, a business course could prompt a chatbot to create scenarios that would integrate character education into the topic, he said.

“A big part of the session I just created is also learning how to detect bias in AI and verify for accuracy and not ever use AI as a replacement for the human experience,” Sullivan said. “AI lacks nuance and doesn’t understand reasoning the way a human does. It will give you a sweep of the internet without any context around it. It is up to the human to then take and decode it and translate it into something meaningful and accurate.”


While the character center and the Character and Integrity Committee continued to plug away at ethical concerns, ChatGPT’s arrival made clear that the university needed a group to navigate AI in the realm of teaching and learning.

That think tank, the Generative AI Committee, an offshoot of the Character and Integrity Committee, identifies AI tools to share with faculty, shares best practices, discusses how to integrate AI into the curriculum and reviews student and faculty feedback.

“We’re just constantly learning new tools that we can use for teaching and learning,” said Executive Director of Online Instruction Rick Holbeck, who heads that committee.

GCU faculty shared that knowledge with educators across the country at the AI-themed virtual LOPE Conference in June.

And the university’s faculty and staff have advanced the use and understanding of AI at 74 conference and training presentations since 2021, including at the prestigious Higher Learning Commission Conference over the summer, where it became obvious GCU was an innovator in AI and teaching.

“We were told, you guys are way ahead of the game,” Holbeck said.

GCU leaders would agree that the university needs to be.

Business of AI

“When you think about it, AI is going to be the greatest productivity and creative tool known to man,” Colangelo College of Business Faculty Chair Greg Lucas said.

Bold statement.

It’s why Lucas is so keen on pushing AI into the classroom.

In March, he conducted a fundamentals of AI session so students could earn a Google Cloud skills badge in generative AI – a type of artificial intelligence that uses machine learning to create new content.

“We had a lot of response,” said the college’s dean, John Kaites. “Now it’s going to be mandatory and part of the basic technology classes you take at the Colangelo College of Business.”

Lucas, a regular contributor at Generative AI Committee meetings, also created a series of AI initiatives in the college, from giving students opportunities to earn digital badges to implementing degree programs such as an MBA with an emphasis in AI.

“Our students really need to be experts in the operation of AI,” Lucas said. “It’s really easy to think that AI courses and AI curriculum belong in the technology college, which isn’t true.

“It extends into every aspect of college business. . . . In the CCOB, we use so many business-specific tools, including Microsoft Office Suite, and all that’s going to have AI built into it. So we need to make sure our students know what AI is, and they know how to use it.”

Artificial intelligence already is part of Introduction to Computer Technology, one of the first classes in which business students are enrolled.

Kaites and Lucas said employers frequently tell them how important it is for graduates to understand how to work with AI, and students and parents already ask Kaites, “How are you integrating AI?”

“It’s going to be a key to our success,” Kaites said. “… Our goal is for them to be able to work in a world that is changing rapidly — and we intend to change rapidly with it.”

AI Skills Lab

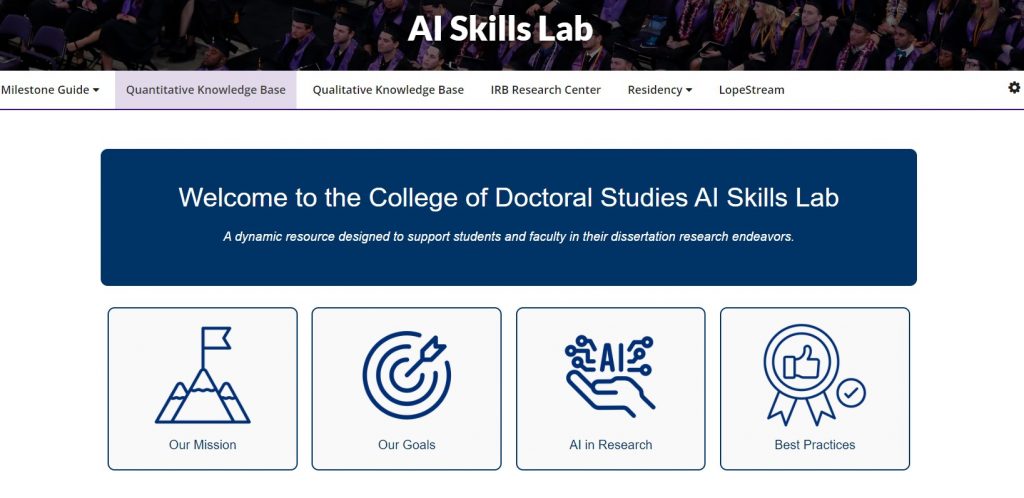

In the College of Doctoral Studies, a 12-person team helmed by Dissertation Program Chair Dr. Kenneth Sherman came up with the idea of creating a virtual AI Skills Lab, a website where students and faculty can access AI tools that act as a tutor or reviewer in their dissertation research.

“… I’m going to give you two key words on this — ethically and responsibly — which is sort of the cornerstone of our lab.”

The lab includes an eight-minute video in which an avatar shows students how to use the website’s prompts, along with access to the Student Success Center’s AI Resource Center, which delves into ethics, AI do’s and don’ts, GCU’s Statement on AI and a section on citing sources.

The importance of using as many resources as possible to help students and faculty was not lost on Dr. Nicholas Markette, the college’s assistant dean.

“President Mueller has declared, we’re going to leverage AI in ways that other universities haven’t,” Markette said. “Dr. (Randy) Gibb, our provost, has charged us with creating applicable skills that make people more valuable in the marketplace. Through Dr. Sherman and his team, they’re accomplishing both of those charges.

“And then at the same time, one of our core values in the college … is innovation. And this is an indication that we’re true to our values, as well.”

Counseling evolution

Dr. Jennifer Young, a counselor, had long been interested in technology in her previous jobs.

After coming to GCU, she explored using “virtual humans” for role-playing sessions with counseling students long before the AI boom.

“Everyone was like, ‘What are you talking about?’ Now, because of the availability and publicity with AI, there is a lot more interest,” said Young, who wrote the paper “AI Reflective Practice and Bias Awareness in Counselor Education,” published in the Association for Counselor Education and Supervision Teaching Practice Briefs.

Young, director of the doctorate in counselor education and supervision, wrote that ChatGPT could be used in simulated counseling scenarios with doctoral students. Those students could receive instant feedback, including when it comes to possible biases.

Spotting potential bias, whether racial, gender or class, is an important part of training counselors, and role-playing is a common tool for bringing it to the surface.

“That conversation can be very difficult,” she said.

But using AI to analyze the session gives them a more comfortable tool that can “take a lot of the pressure off and some of that perception that they could be judged and allows them to reflect on it later.”

Young has researched the implementation of that tool, though curriculum revisions would need to be approved before it becomes a regular part of counselor training.

She said AI could eventually help ease the shortage of counselors, as long as confidentiality issues are addressed.

“The field will look very different in the next five to 10 years,” she said.

Classroom successes

Many other faculty members are using AI in a variety of ways in their classrooms.

English instructors in the College of Humanities and Social Sciences ask students to use AI to brainstorm ideas, and department chair Dr. Maria Zafonte provides guidelines for writing papers, such as applying AI to “prewriting and polishing rather than the actual writing itself.”

In the College of Education, Dr. Paul Danuser develops quizzes with AI, and Dr. Katy Long asks students to use it to develop lesson outlines and revise work for their classroom.

“We are working really hard to integrate opportunities in our programs, using AI to generate lesson plans, then critique the generation of materials to determine if it is accurate,” said College of Education Dean Dr. Meredith Critchfield. “We are trying to use AI as a critical thinking tool.

“We think of it as a guide on the side.”

Perhaps the most important guide ever to walk alongside educators.
