EDITOR'S NOTE: This is part two of a two-part series on AI anxiety and how GCU's new virtual Center for Educational Technology and Learning Advancement is helping to support AI literacy. It also focuses on how CETLA guides students and faculty in the ethical and responsible use of artificial intelligence. In case you missed it, here's part one.

Logging on to an artificial intelligence model for the first time can be intimidating.

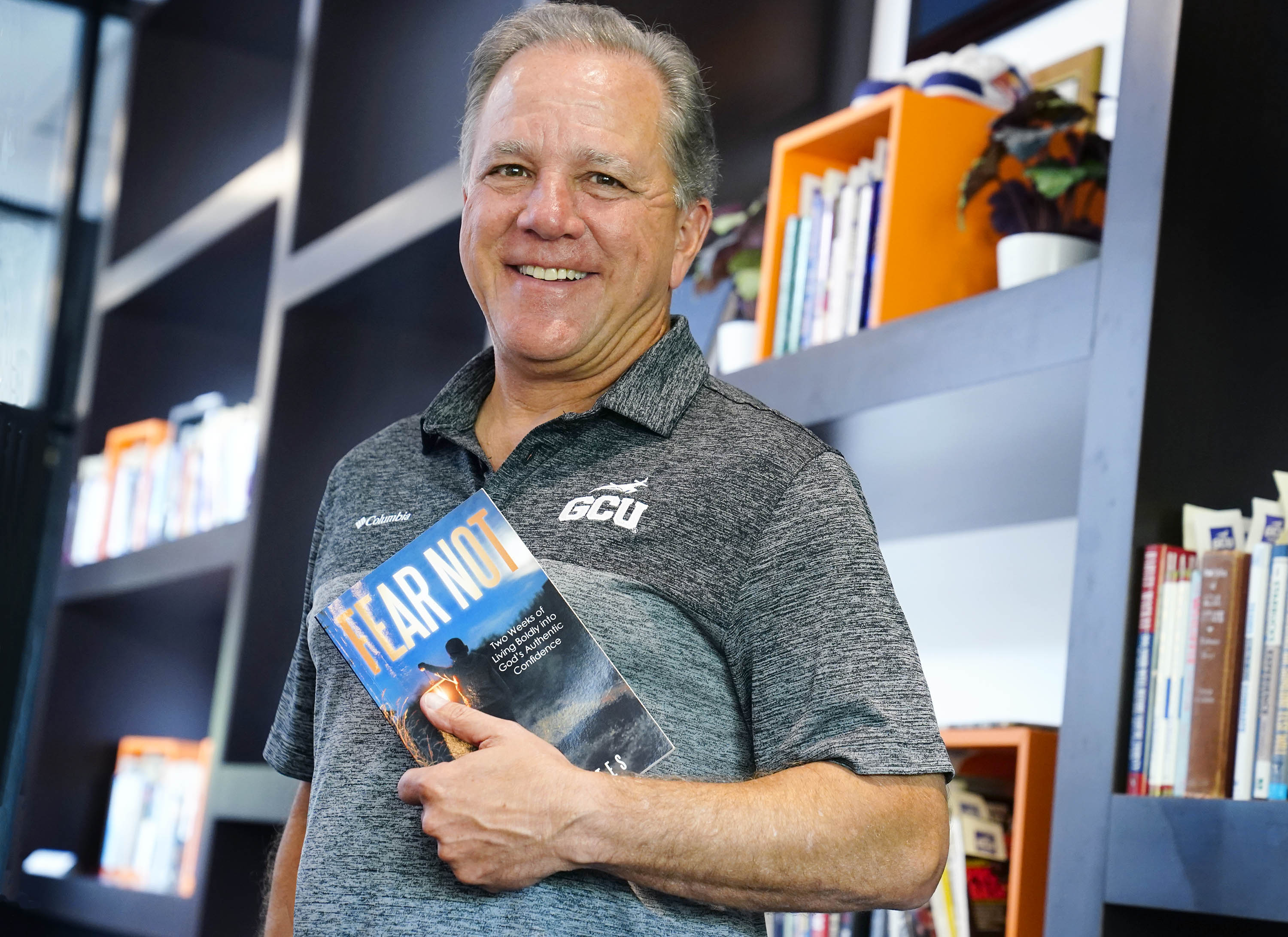

It’s why AI champions are embedded in Grand Canyon University's colleges. In the College of Natural Sciences, they are Professor Dr. Mark Wireman and instructor Scott Rex.

Those in the role serve as conduits for consistently implementing the policies of the Center for Educational Technology and Learning Advancement, or CETLA, a virtual center introduced by GCU this year that sets clear guidelines for ethical and responsible AI use universitywide.

“I think the main angst that I've had with a couple of people is just how do you approach (the topic),” Rex said. “You think they've used AI and are trying to figure out if they understood and how they used it, and what is the best way to do that.”

AI is simply machine learning, said GCU’s Rick Holbeck, CETLA co-director alongside Dr. Jean Mandernach. It gains knowledge by consuming content on the internet without regard for facts, accuracy, sources or logic. Artificial intelligence is just the consumption of zeroes and ones, whether stored in data centers or “fed” into the system by humans. Human programming and prejudices also affect how AI learns.

“I can share (the concerns) from my daughter's perspective because she's a student here at GCU,” Wireman said. “This new policy gave her … anxiety because she uses AI for brainstorming on her essays.”

Her teacher started this year by telling students they could use AI, but it needed to be disclosed.

“(My daughter) went back and forth, back and forth,” Wireman said. “(She wondered,) ‘Should I do that? I don't want to get a zero.’ I think that's where much of the anxiety comes from, more on the student end.”

After the CETLA policy rolled out, colleges set the parameters for when and how artificial intelligence can be used. In some math and science courses, GCU offers MOSAIC, a ChatGPT-based model designed for the classes.

“(I tell students) you can't use it to write your paper, but you can use it to help rewrite your paper,” Rex said. “If you do, just tell me that you used it to rewrite your paper. I want to know how you used it so that you're being fully transparent with me.”

Another aspect of CETLA policies in the real world is demonstrating to students how AI can be used in professional careers.

In medicine, AI “listens” to conversations between practitioners and patients to generate session notes. This lets automation take on the drudgery of time-consuming paperwork.

“I mentioned that to the class, and actually, I gave it a shot,” Wireman said. “I had a meeting with a speaker and a bunch of students. AI created a summary that was spot on, (getting) rid of all the jokes about pizza or pop and whatever. All I had to do was … reformat it.”

That, he said, is precisely what’s going on in the medical world.

Rex has used AI to help train forensic science students in handling court testimony.

Artificial intelligence serves as an attorney and asks questions of the student forensic scientists. This training is essential because court cases can be affected when forensic scientists are unable to answer questions effectively during testimony. Rex brought that real-world experience into GCU forensic science classrooms.

“In my previous job, I was actually recommending AI to train … people,” Rex said. “We would train our forensic scientists on courtroom testimony. What I discovered is that AI is very good at serving as an attorney.”

In his first semester at GCU, he introduced AI into classroom assignments and projects.

Genesis Vera and Christian Merryman, students in GCU's forensic sciences program, have participated in AI attorney court testimony. They said the experience was eye-opening.

The real-world AI training shows students how to prompt the models to extract information, as well as the limits of relying on the system for accurate responses.

It’s a matter of trust, but verify, said Wireman. AI has a history of hallucinating. Sometimes it just makes up facts, Mandernach said.

“I tell students to double-check it,” Wireman said. “Focus on (what) makes sense.”

Rex has similar warnings for students using AI for researching case studies or other reports. This caution helps students learn the limits of AI and its results.

“(I tell them to) look at the core material,” he said. “You could end up with some really cool-sounding facts that are made up, and you won't know if you don't verify afterward. I make them cite their sources. Is it Frank's toxicology blog or the National Institutes of Health?”

The AI model is more than just a research tool. Rex believes it is a secular tool that may strengthen faith through challenge. As a machine, it has ingested religious knowledge, but AI has no particular religious preference, he said.

“I could see it almost as a way to strengthen your faith,” he said. “What would happen if you actually asked it very pointed questions about the Bible and asked it to analyze it from an AI religious perspective?”

The result, Rex speculates, might be interesting: a chance to see whether the exercise strengthens faith or deepens understanding.

“(What happens) if you suggest to students, ‘Hey, play around with ChatGPT and see what it comes up with from a secular viewpoint,’” Wireman said. “(Students could) get shook a little bit. I think that's very helpful for what Scott said is strengthening their faith … because it makes it stronger.”

The challenge for educators, Wireman and Rex included, is how fast AI is evolving.

Wireman talks of struggling last year to create images. This year, images are smooth, he said, but video is clunky. Next year, he expects video to be streamlined.

“I’m learning this like everyone else,” Rex said.

And that, more than any policy or platform, is what’s easing AI anxiety at GCU – a shared willingness to learn, question, challenge and grow. In a world where technology changes daily, the commitment to learn together may be the most powerful tool.

How AI was used in the preparation of this article

As in part one, ChatGPT was used to summarize and identify relevant quotes, then create an outline that continued the story from the completed part one. The writer modified the AI-generated, nine-section outline into four sections.

ChatGPT then was asked to pull relevant quotes from uploaded transcripts to flesh out each section. The writer either used or paraphrased the quotes in and around the narrative bridges. Comments by the forensic sciences students were pulled from two interviews for a future story on a College of Natural Sciences program.

AI wrote no content. The ethical and responsible use of AI in developing this article enabled the creation of a completely human-written story.

The story was edited through human intervention.

Eric Jay Toll can be contacted at [email protected]
